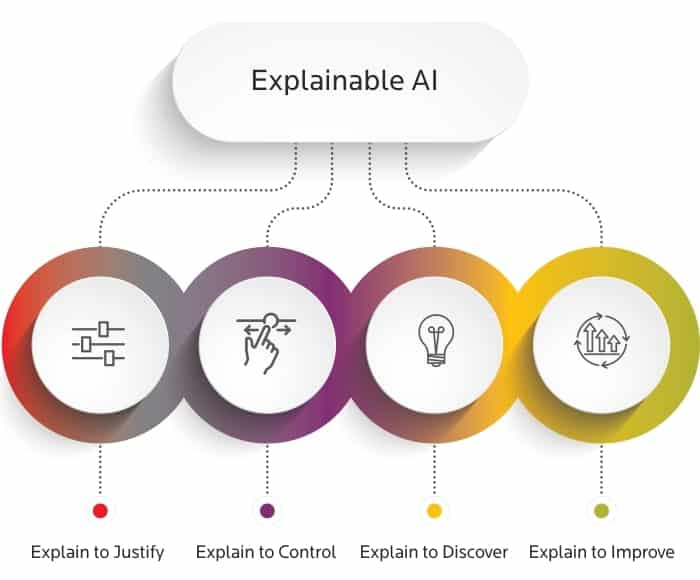

Explainable AI (XAI) is changing the way we interact with artificial intelligence by making it more transparent and trustworthy. XAI refers to methods and AI systems that can describe their purpose, rationale, and decision-making process in a way that a non-specialist can understand. It's like giving AI a voice to explain itself, bridging the gap between complex algorithms and human comprehension.

Why XAI Matters

In today's AI-driven world, XAI is becoming increasingly crucial for several reasons:

- Building Trust: By providing clear explanations for AI decisions, XAI helps users feel more confident in relying on AI systems, especially in high-stakes areas like healthcare and finance.

- Enhancing Accountability: XAI allows us to identify and address potential biases or errors in AI models, ensuring more responsible and fair use of technology.

- Improving Decision-Making: With XAI, humans can make more informed decisions by understanding the reasoning behind AI recommendations.

- Regulatory Compliance: As AI becomes more prevalent, XAI helps organizations meet growing regulatory requirements for transparency in AI systems.

- Accelerating AI Development: By understanding how AI models work, developers can optimize and improve them more effectively.

How XAI Works

XAI combines several properties and techniques to make AI systems more interpretable:

- Transparency: Some models are designed to be open and understandable from the start (for example, linear models or shallow decision trees), letting users inspect how inputs lead to outputs.

- Interpretability: The information a model or its explanation provides must be meaningful to the people using it, so they can understand why a particular output was produced.

- Visualization: XAI often uses data visualization techniques to represent complex AI decisions in a more digestible format.
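To make "transparency" concrete, here is a minimal sketch of an intrinsically interpretable model: a linear scorer whose output splits exactly into one contribution per feature, printed as a crude text bar chart in place of a real visualization. The feature names, weights, and bias are hypothetical and chosen only for illustration.

```python
# Minimal sketch of a transparent (intrinsically interpretable) model:
# a linear scorer whose output splits exactly into per-feature terms.
# Feature names, weights and bias are hypothetical, for illustration only.


def explain_linear(weights, bias, features):
    """Return (score, ranked), where ranked lists (name, weight * value)
    from most to least influential by absolute size."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


weights = {"age": 0.02, "blood_pressure": 0.01, "smoker": 0.5}  # hypothetical
patient = {"age": 60, "blood_pressure": 140, "smoker": 1}
score, ranked = explain_linear(weights, bias=-2.0, features=patient)

# A simple text "bar chart" standing in for a visual explanation.
for name, contribution in ranked:
    print(f"{name:>15} {contribution:+.2f} {'#' * round(abs(contribution) * 10)}")
print(f"{'total score':>15} {score:+.2f}")
```

Because the score is just the bias plus the listed contributions, the explanation is exact rather than approximate, which is what distinguishes transparent models from post hoc explanations of black boxes.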

Real-World Applications of Explainable AI

Explainable AI (XAI) has found numerous real-world applications across various industries, enhancing transparency and trust in AI-driven decision-making processes. Here are some notable examples:

Healthcare

- AI-powered cancer detection systems provide visual explanations highlighting suspicious regions in medical images, allowing radiologists to validate findings more effectively.

- XAI assists in treatment planning by explaining the reasoning behind medication or therapy recommendations based on patient data points.

- In intensive care units, XAI-enhanced monitoring systems explain predictions of potential complications, enabling medical teams to take preventive action.

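- XAI tools support clinicians in diagnosing diseases like COVID-19 by providing post hoc explanations of a trained model's outputs, for example by highlighting which image regions or clinical features drove a prediction.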
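One common way to produce the "highlighted regions" mentioned above is occlusion sensitivity: cover part of the image with a blank patch and measure how much the model's score drops. The sketch below assumes a toy 4x4 image and a hypothetical `toy_model` that only responds to the top-left corner; a real system would call an actual image classifier.

```python
# Occlusion sensitivity sketch: slide a blank patch over the image and record
# how much the model's score drops. Large drops mark regions the model relies on.
# toy_model is a hypothetical stand-in for a real medical-image classifier.


def occlusion_map(image, model, patch=2, baseline=0.0):
    rows, cols = len(image), len(image[0])
    base_score = model(image)
    heat = [[0.0] * cols for _ in range(rows)]
    for r0 in range(0, rows, patch):
        for c0 in range(0, cols, patch):
            occluded = [row[:] for row in image]  # copy so the input is untouched
            cells = [(r, c) for r in range(r0, min(r0 + patch, rows))
                     for c in range(c0, min(c0 + patch, cols))]
            for r, c in cells:
                occluded[r][c] = baseline
            drop = base_score - model(occluded)
            for r, c in cells:
                heat[r][c] = drop
    return heat


def toy_model(img):
    # Hypothetical "lesion detector" that only looks at the top-left 2x2 region.
    return sum(img[r][c] for r in range(2) for c in range(2))


image = [[1.0, 1.0, 0.2, 0.2],
         [1.0, 1.0, 0.2, 0.2],
         [0.3, 0.3, 0.3, 0.3],
         [0.3, 0.3, 0.3, 0.3]]
for row in occlusion_map(image, toy_model):
    print(" ".join(f"{v:.1f}" for v in row))
```

The resulting heat map is nonzero only where occlusion changed the score, which is the region a radiologist would then check against the image.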

Finance

- Banks use XAI in fraud detection to explain, in real time, which transaction patterns triggered an alert.

- XAI enhances transparency in loan approval processes, providing clear explanations for loan denials and ensuring fair lending practices.

- In credit risk assessment, XAI helps in understanding the factors influencing credit decisions.
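A simple way to turn a credit model into explanations for loan denials is to report "reason codes": the features that pulled an applicant's score down the most relative to a reference applicant. This is a minimal sketch assuming a linear scorecard; every weight, reference value, and the cutoff are hypothetical.

```python
# Sketch of "reason codes" for a credit decision from a linear scorecard.
# Each feature's contribution is measured against a reference applicant, and
# the features that pulled the score down most become the denial reasons.
# All weights, reference values and the cutoff are hypothetical.

WEIGHTS = {"utilization_pct": -3.0, "late_payments": -40.0,
           "years_of_history": 10.0, "income_thousands": 1.5}
REFERENCE = {"utilization_pct": 30, "late_payments": 0,
             "years_of_history": 8, "income_thousands": 55}
BASE_SCORE = 650.0  # score of the reference applicant
CUTOFF = 620.0


def score_with_reasons(applicant, top_n=2):
    deltas = {f: WEIGHTS[f] * (applicant[f] - REFERENCE[f]) for f in WEIGHTS}
    score = BASE_SCORE + sum(deltas.values())
    approved = score >= CUTOFF
    # Most negative contributions first; reasons are only reported for denials.
    negatives = sorted((d, f) for f, d in deltas.items() if d < 0)
    reasons = [] if approved else [f for _, f in negatives[:top_n]]
    return score, approved, reasons


applicant = {"utilization_pct": 80, "late_payments": 2,
             "years_of_history": 5, "income_thousands": 60}
print(score_with_reasons(applicant))  # (397.5, False, ['utilization_pct', 'late_payments'])
```

Measuring contributions against a reference applicant, rather than against zero, keeps the reasons meaningful: "late payments" only counts against the applicant if they have more than the reference profile.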

Automotive

- XAI explains decision-making processes in autonomous vehicles, improving safety and building trust among passengers.

- It can show why a self-driving car chose a particular lane change or emergency maneuver.

Human Resources

- XAI tools reveal potential biases in AI-driven hiring algorithms, helping organizations keep recruitment fair and based on merit.
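Bias reviews of hiring models are often paired with a simple outcome audit. The sketch below applies the "four-fifths rule" of thumb from the US Uniform Guidelines on Employee Selection Procedures: a group whose selection rate falls below 80% of the highest group's rate is flagged for closer review. The group names and counts are hypothetical, and a flag is a prompt for investigation, not a legal finding.

```python
# Adverse-impact audit using the four-fifths rule of thumb: compare each
# group's selection rate with the highest group's rate.
# Group names and counts are hypothetical.


def adverse_impact(outcomes, threshold=0.8):
    """outcomes maps group -> (selected, applicants); returns group -> (ratio, flagged)."""
    rates = {g: selected / applicants for g, (selected, applicants) in outcomes.items()}
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group has any selections; impact ratio is undefined")
    return {g: (rate / best, rate / best < threshold) for g, rate in rates.items()}


report = adverse_impact({"group_a": (48, 80), "group_b": (24, 60)})
for group, (ratio, flagged) in report.items():
    print(f"{group}: impact ratio {ratio:.2f}" + ("  <- review" if flagged else ""))
```

When a group is flagged, explanation techniques such as feature attributions can then help locate which inputs drive the disparity.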

Environmental and Agricultural Management

- XAI shows which water-quality parameters drive a groundwater monitoring model's predictions, helping focus efforts on the most critical factors.

Cybersecurity

- XAI enhances intrusion detection systems by explaining why network activity was flagged, making machine learning detectors easier for security analysts to verify and trust.

Marketing and Sales

- XAI improves customer segmentation, sales forecasting, and ad targeting by making AI-driven insights more understandable.

- It assists in churn prediction by providing insights into factors leading to customer attrition.
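Permutation feature importance is one model-agnostic way to surface the factors behind churn predictions: shuffle one feature across customers and measure how much accuracy drops. In this sketch the customers are synthetic and `churn_model` is a hypothetical rule standing in for a trained model.

```python
import random

# Permutation feature importance sketch for a churn model: shuffle one feature
# column at a time and measure the average drop in accuracy.
# churn_model and the synthetic customers are hypothetical stand-ins.


def accuracy(model, rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(model, rows, labels, feature, n_repeats=20, seed=0):
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    drops = []
    for _ in range(n_repeats):
        column = [r[feature] for r in rows]
        rng.shuffle(column)
        shuffled = [{**r, feature: v} for r, v in zip(rows, column)]
        drops.append(baseline - accuracy(model, shuffled, labels))
    return sum(drops) / n_repeats


def churn_model(customer):
    # Hypothetical rule: customers with many support tickets are predicted to churn.
    return customer["support_tickets"] >= 3


rng = random.Random(42)
customers = [{"support_tickets": rng.randint(0, 6), "tenure_months": rng.randint(1, 60)}
             for _ in range(200)]
labels = [c["support_tickets"] >= 3 for c in customers]  # synthetic ground truth

for feature in ("support_tickets", "tenure_months"):
    print(feature, round(permutation_importance(churn_model, customers, labels, feature), 3))
```

A feature the model ignores (here `tenure_months`) scores exactly zero, while the feature it depends on shows a large drop, which is the kind of signal a retention team would act on.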

Education

- AI-based learning systems that offer personalized learning paths can use XAI to show educators how the system analyzes students' performance.

Challenges in Implementing Explainable AI

Implementing Explainable AI (XAI) presents several significant challenges:

Trade-offs Between Performance and Transparency

One of the primary challenges in XAI is balancing model accuracy with interpretability. Complex AI models, particularly deep neural networks, often function as 'black boxes,' making their decision-making processes difficult to understand. While simplifying these models can improve transparency, it may come at the cost of reduced performance, especially in tasks requiring subtle pattern recognition.
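One way to quantify this trade-off is a global surrogate: train a very simple model to mimic a complex one and measure its fidelity, the share of inputs on which the two agree. The sketch below fits a one-split decision stump to a hypothetical nonlinear `black_box`; the stump is fully readable, but it cannot reproduce the black box exactly.

```python
# Global surrogate sketch: approximate a "black box" with a one-split decision
# stump trained on the black box's own predictions, then measure fidelity
# (how often the simple model agrees). black_box is a hypothetical stand-in.


def black_box(x):
    # Hypothetical nonlinear model over two features.
    return (x[0] * x[0] + 0.5 * x[1]) > 1.0


def fit_stump(points, targets):
    """Try every feature/threshold pair and keep the best-agreeing rule x[f] > t."""
    best = (0.0, None, None)
    for f in range(len(points[0])):
        for t in sorted({p[f] for p in points}):
            agree = sum((p[f] > t) == y for p, y in zip(points, targets)) / len(points)
            if agree > best[0]:
                best = (agree, f, t)
    return best  # (fidelity, feature index, threshold)


grid = [(i / 10, j / 10) for i in range(-20, 21) for j in range(-20, 21)]
targets = [black_box(p) for p in grid]
fidelity, feature, threshold = fit_stump(grid, targets)
print(f"surrogate: predict True if x[{feature}] > {threshold} (fidelity {fidelity:.0%})")
```

The gap between the surrogate's fidelity and 100% is a rough measure of how much of the complex model's behavior the simple explanation leaves out.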

Technical Complexity

Developing XAI systems requires sophisticated architectures that maintain high performance while generating human-interpretable explanations. This added complexity increases:

- Computational power requirements

- Training data needs

- Development time

- Expertise needed for deployment

Integration with Existing Systems

Incorporating XAI into established IT infrastructures poses significant technical hurdles:

- Data compatibility issues, as legacy systems often store information in non-standardized formats.

- Complexity of existing IT architectures, requiring careful consideration of how XAI components will interact with established workflows.

- Security concerns, as legacy systems may lack the modern APIs or secure data exchange protocols needed for safe AI implementation.

Human Factors

XAI implementation demands meaningful human involvement throughout the development process:

- Technical teams must collaborate closely with domain experts and end-users.

- Generated explanations must be not just technically sound but useful and actionable in real-world contexts.

- Teams must address potential human biases in how explanations are interpreted and acted on.

Regulatory and Ethical Considerations

As AI becomes more ingrained in society, regulatory challenges emerge:

- Compliance with evolving AI regulations, such as the European Union's AI Act.

- Balancing transparency requirements with the need to protect proprietary algorithms and maintain commercial advantage.

Data Quality and Bias

The effectiveness of XAI systems is heavily dependent on the quality and representativeness of training data:

- AI algorithms can only provide answers as good as the data they've been trained on.

- Incorrect or biased training data can lead to flawed or unfair decision-making processes.

How Explainable AI Contributes to Regulatory Compliance

Explainable AI (XAI) plays a crucial role in ensuring regulatory compliance across various industries by promoting transparency, accountability, and fairness in AI-driven decision-making processes. Here's how XAI contributes to regulatory compliance:

Transparency and Understanding

XAI makes AI decision-making processes transparent and understandable, which is essential for meeting regulatory requirements. This transparency allows organizations to:

- Justify decisions made by automated systems to stakeholders and regulators.

- Provide clear explanations for AI-driven outcomes, especially when they affect individuals' rights.

- Meet obligations such as the GDPR's requirement to give individuals meaningful information about the logic involved in automated decisions, and the transparency requirements of the EU AI Act.

Bias Detection and Mitigation

XAI helps organizations identify and mitigate bias within their AI systems, which is crucial for complying with fairness regulations. By using XAI techniques, developers can:

- Examine biases in their models and adjust them accordingly.

- Ensure AI systems do not discriminate based on protected characteristics.

- Implement fairness constraints to treat all users equitably.
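Bias examinations usually start with a measurable criterion. The sketch below checks one such criterion, equal opportunity, by comparing true positive rates across groups: if qualified people in one group receive favorable predictions less often, the gap shows up directly. The records and the 0.1 tolerance are hypothetical.

```python
# Sketch of one fairness check: compare true positive rates across groups
# (the "equal opportunity" criterion). The records and tolerance are hypothetical.


def true_positive_rates(records):
    """records: iterable of (group, actual, predicted) with boolean labels."""
    hits, positives = {}, {}
    for group, actual, predicted in records:
        if actual:  # only truly qualified cases count toward the TPR
            positives[group] = positives.get(group, 0) + 1
            hits[group] = hits.get(group, 0) + int(predicted)
    return {g: hits[g] / positives[g] for g in positives}


records = (
    [("a", True, True)] * 9 + [("a", True, False)] * 1
    + [("b", True, True)] * 6 + [("b", True, False)] * 4
    + [("a", False, False)] * 5 + [("b", False, True)] * 2
)
tpr = true_positive_rates(records)
gap = max(tpr.values()) - min(tpr.values())
print(tpr, f"gap={gap:.2f}", "review" if gap > 0.1 else "ok")
```

Equal opportunity is only one of several fairness criteria, and they can conflict; which one applies depends on the domain and the relevant regulation.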

Accountability and Traceability

XAI enhances accountability by providing insights into how algorithms arrive at specific outcomes. This contributes to regulatory compliance by:

- Supporting the activity logging (record-keeping) that the EU AI Act requires for high-risk AI systems, by recording not only decisions but the reasons behind them.

- Allowing for regular reviews to ensure automated decision-making meets intended use and guidelines.

- Facilitating audits of AI models for biases and fairness.
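Logging each automated decision together with its explanation is what makes later reviews and audits practical. Below is a minimal sketch of an append-only JSON Lines log; the field names and model version string are hypothetical and are not a schema mandated by the EU AI Act or any other regulation.

```python
import json
import os
import tempfile
from datetime import datetime, timezone

# Sketch of an append-only decision log (JSON Lines) that stores each automated
# decision with its explanation for later review. Field names and the
# model_version value are hypothetical, not a regulatory schema.


def log_decision(path, model_version, inputs, output, top_factors):
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "inputs": inputs,
        "output": output,
        "explanation": top_factors,
    }
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return record


def load_decisions(path):
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


log_path = os.path.join(tempfile.mkdtemp(), "decisions.jsonl")
log_decision(log_path, "credit-model-v1", {"utilization_pct": 80, "late_payments": 2},
             "deny", ["utilization_pct", "late_payments"])
print(load_decisions(log_path)[0]["explanation"])
```

Storing the model version alongside each record lets an auditor tie every explanation to the exact model that produced it.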

Building Trust and Reducing Disputes

By making AI decision-making processes more transparent, XAI helps build trust with consumers and stakeholders. This trust-building aspect:

- Reduces the likelihood of disputes.

- Fosters customer confidence, which complements formal regulatory compliance.

- Enables organizations to provide clear reasons for decisions, such as loan approvals or denials.

Meeting Specific Regulatory Requirements

XAI helps organizations comply with specific regulations across different sectors:

- In finance, it aids in meeting requirements set by the UK Financial Conduct Authority (FCA) for transparency in decision-making processes.

- For employment practices, it helps comply with US Equal Employment Opportunity Commission (EEOC) standards by examining how AI impacts hiring decisions.

- In high-risk AI applications, it supports compliance with the EU AI Act's requirements for explainability and transparency.

Adapting to Evolving Regulations

As AI regulations continue to evolve, XAI positions organizations to adapt more easily by:

- Providing a framework for explaining AI decisions, which is likely to be a key component of future regulations.

- Enabling organizations to get ahead of regulatory trends by proactively implementing transparent AI models.

- Helping balance the need for explainability with protecting proprietary technology.

The Future of XAI

As AI continues to evolve and integrate into our daily lives, XAI will play an increasingly important role. It's not just about making AI smarter; it's about making it more human-friendly and aligned with our values. By fostering trust, accountability, and understanding, XAI is paving the way for a future where humans and AI can work together more harmoniously and effectively.

In conclusion, Explainable AI is more than just a technical advancement – it's a bridge between the complex world of AI and human understanding. As we continue to push the boundaries of what AI can do, XAI ensures that we don't lose sight of the importance of transparency and trust in our relationship with technology.